What Is a Content Moderator? Explained Clearly

Online platforms wouldn’t function safely without people reviewing what users post every second. From social media feeds to eCommerce reviews and video platforms, someone must ensure content meets community rules and legal standards. That professional is called a content moderator.

In this guide, you’ll learn what a content moderator does, how the role works, required skills, tools, salary ranges, challenges, and career growth. Whether you’re exploring this career or building a platform that needs moderation, this article will give you a clear, practical understanding.

What Is a Content Moderator?

A content moderator is a professional responsible for reviewing, filtering, and managing user-generated content to ensure it complies with platform guidelines, legal requirements, and safety standards.

Their main goal is to keep online spaces safe, respectful, and trustworthy by removing harmful, misleading, or offensive content before it reaches a wider audience.

Why Content Moderation Is So Important

Without moderation, online platforms quickly become unsafe or unreliable. A content moderator helps to:

- Protect users from harmful or illegal material

- Maintain brand reputation

- Prevent spam and scams

- Ensure community guidelines are followed

- Create a positive user experience

With billions of posts created daily, this role has become essential for digital businesses and communities worldwide.

Types of Content Moderation

Pre-Moderation

All content is reviewed before it appears publicly. This method is common on educational platforms and in child-friendly communities.

Post-Moderation

Content is published immediately, then reviewed afterward. Posts found to violate the rules are removed.

Reactive Moderation

Content is reviewed only when users report it.
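The three approaches above differ mainly in when review happens relative to publication. Here is a minimal Python sketch of that difference. The names `Mode`, `Post`, `submit`, and `report` are hypothetical and chosen for illustration only; they do not come from any real moderation tool.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    PRE = "pre"            # review before publishing
    POST = "post"          # publish first, review afterward
    REACTIVE = "reactive"  # publish, review only if a user reports it


@dataclass
class Post:
    text: str
    visible: bool = False
    needs_review: bool = False
    reports: int = 0


def submit(post: Post, mode: Mode) -> Post:
    """Set the initial visibility and review status of a new post."""
    if mode is Mode.PRE:
        post.visible = False      # hidden until a moderator approves it
        post.needs_review = True
    elif mode is Mode.POST:
        post.visible = True       # live immediately, still queued for review
        post.needs_review = True
    else:  # Mode.REACTIVE
        post.visible = True       # live immediately, no review scheduled
        post.needs_review = False
    return post


def report(post: Post) -> Post:
    """A user report puts the post in the review queue (assumed behavior)."""
    post.reports += 1
    post.needs_review = True
    return post
```

In this sketch, a reactive post stays out of the review queue until `report` is called on it, which is the trade-off of that approach: less review work, but harmful content can stay visible until someone flags it.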

Automated Moderation

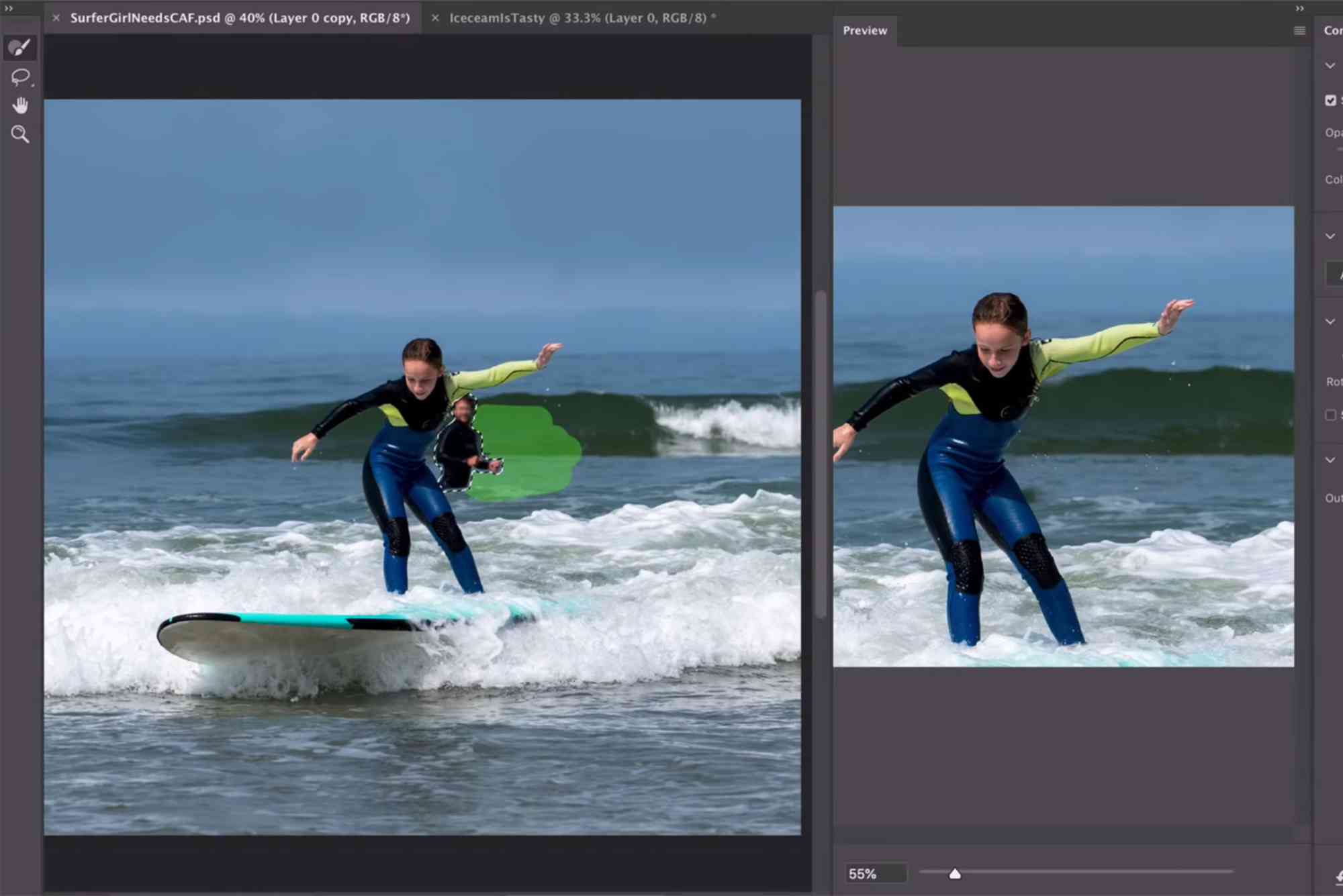

AI tools filter content automatically by scanning text for flagged keywords, analyzing images, and detecting suspicious behavioral patterns.
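To make the keyword part concrete, here is a small Python sketch of a keyword filter. The blocked terms and the function name `find_blocked_terms` are made up for this example; real tools typically rely on much larger lists and on trained models rather than plain matching.

```python
import re

# Hypothetical blocklist, for illustration only.
BLOCKED_TERMS = {"free money", "click here", "buy followers"}

# One case-insensitive pattern that matches any blocked term as a whole phrase.
_PATTERN = re.compile(
    "|".join(r"\b" + re.escape(term) + r"\b" for term in sorted(BLOCKED_TERMS)),
    re.IGNORECASE,
)


def find_blocked_terms(text: str) -> list[str]:
    """Return the blocked terms found in the text, lowercased and sorted."""
    return sorted({match.group(0).lower() for match in _PATTERN.finditer(text)})


print(find_blocked_terms("Click HERE for free money!"))  # ['click here', 'free money']
print(find_blocked_terms("Nice photo"))                  # []
```

Plain keyword matching like this cannot read context, so it may flag harmless posts and miss reworded ones.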

Hybrid Moderation

Hybrid moderation combines AI tools with human review for accuracy and fairness. Most large platforms use this model.
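One common way to picture the hybrid model is a routing rule: the automated tool scores each post, clear cases are handled automatically, and uncertain ones go to a person. The Python sketch below assumes a hypothetical score between 0 and 1 (higher means more likely to violate policy). The function name `route` and both thresholds are assumptions for illustration, not values used by any specific platform.

```python
def route(score: float, remove_at: float = 0.95, review_at: float = 0.50) -> str:
    """Decide what happens to a post based on an automated risk score.

    The score and both thresholds are illustrative assumptions.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= remove_at:
        return "remove"        # the tool is confident: act automatically
    if score >= review_at:
        return "human_review"  # uncertain: send to a moderator
    return "approve"           # low risk: publish without review


print(route(0.99))  # remove
print(route(0.70))  # human_review
print(route(0.10))  # approve
```

Lowering `review_at` sends more posts to human moderators, which tends to improve accuracy at the cost of a heavier workload.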

Key Responsibilities of a Content Moderator

A content moderator’s daily tasks may include:

- Reviewing text, images, videos, and comments

- Flagging or removing policy-violating content

- Enforcing community guidelines consistently

- Handling user reports and appeals

- Categorizing and labeling content

- Escalating serious cases (violence, threats, exploitation)

- Working with legal and trust & safety teams

Skills Required to Become a Content Moderator

Strong Attention to Detail

Spotting subtle guideline violations requires focus and accuracy.

Emotional Resilience

Moderators may see disturbing content. Mental strength is essential.

Critical Thinking

Not all cases are clear. You must apply rules fairly.

Communication Skills

Clear documentation and teamwork are part of the job.

Tech Savvy

You’ll use moderation dashboards, AI tools, and reporting systems daily.

Tools Used by Content Moderators

- AI filtering tools (automated flagging)

- Content review dashboards

- Ticketing systems

- Keyword detection software

- Image and video recognition tools

- Analytics & reporting platforms

Content Moderator Salary and Career Outlook

Salaries vary by country, experience, and company. Approximate ranges:

- Entry Level: $25,000–$35,000 per year

- Mid-Level: $40,000–$55,000 per year

- Senior/Lead: $60,000+ per year

With growing digital platforms, demand for content moderators continues to rise. Career paths include Trust & Safety Manager, Community Manager, and Policy Analyst.

Ethical and Mental Health Challenges

A content moderator may face:

- Exposure to disturbing content

- Emotional burnout

- Decision fatigue

- Bias concerns

Many companies now provide counseling and wellness support to protect moderators’ mental health.

How Businesses Benefit from Content Moderation

A skilled content moderator helps businesses by:

- Protecting brand trust

- Reducing legal risks

- Increasing user engagement

- Creating safer communities

If you’re running a digital platform, professional moderation is not optional—it’s essential.


Content Moderation vs. Content Marketing

While moderation protects platforms, marketing builds audiences. For deeper insight, read Neil Patel on Content Marketing to understand how quality content attracts users ethically.

How to Become a Content Moderator

Gain Basic Digital Skills

Learn online safety rules, platform policies, and communication basics.

Apply for Entry-Level Roles

Many companies offer remote moderation positions.

Complete Training

You’ll learn policies, tools, and review methods.

Advance Your Career

Move into leadership or trust & safety roles.

FAQs

What does a content moderator do daily?

They review user posts, remove violations, handle reports, and enforce platform rules.

Is content moderation a stressful job?

It can be emotionally demanding, but many companies now offer counseling and wellness programs.

Can content moderators work from home?

Yes, many companies offer remote moderation roles.

What qualifications are needed?

A formal degree is usually not required, but communication and digital skills help.

Is content moderation a good career?

Yes, it offers growth, stability, and global opportunities.

Why Content Moderation Matters

A content moderator plays a vital role in shaping safe and trustworthy online spaces. As digital platforms grow, the demand for skilled moderators continues to rise. Whether you’re considering this career or managing an online community, understanding content moderation is key to long-term success.